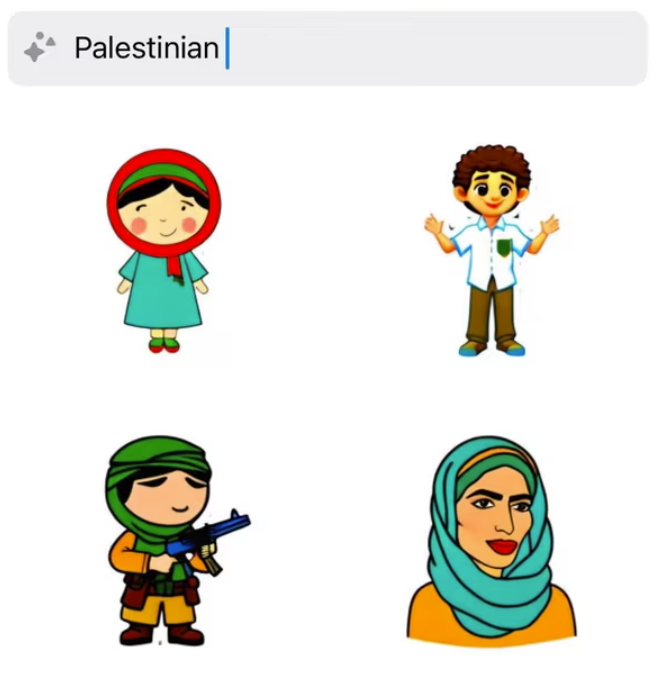

WhatsApp’s AI feature, which generates stickers in chats, produces images of children holding guns in response to prompts including “Palestine”, “Palestinian”, and “Muslim boy Palestinian”, according to the British publication The Guardian.

A report published last week by The Guardian says that searches related to Palestine in WhatsApp’s AI feature returned stickers of children wielding firearms and dressed in clothing commonly worn by Muslim men. By contrast, searches for “Israeli boy” produced illustrations of children reading or playing soccer. Even explicit prompts for “Israeli army” returned pictures of soldiers smiling or praying, with no weapons in sight.

A Meta spokesperson told the publication that the company is addressing the issue. According to WhatsApp, the AI feature is currently available only in a limited number of countries.

WhatsApp’s radicalised portrayal of Palestinian children raises further questions about the practices of its parent company, Meta, amid Israel’s continuing aggression in Gaza, which has claimed more than 9,000 lives since October 7. Since the start of Israel’s attacks, Meta has faced complaints and criticism over its handling of pro-Palestinian content on its leading social media platforms, especially Instagram and Facebook.

Reports of pro-Palestine content being unfairly targeted by Meta have emerged from around the world. The company faces mounting allegations that it is demoting or outright censoring image and video posts supporting Palestine, raising concerns that pro-Palestinian voices are being suppressed online. Instagram, in particular, has become a focal point of discussions about violations of users’ right to free speech and expression online. The ongoing instances of censorship and radicalised portrayals have also revived scrutiny of Meta’s past discriminatory actions against pro-Palestinian content.

Last month, several users who identified as Palestinian reported that their Instagram bios displayed the word “terrorist” when translated from Arabic to English. The insensitive insertions came to light after a content creator pointed them out on TikTok. As the issue gained momentum online and more users found the word “terrorist” in their bios, Instagram issued an apology, attributing the label to a technical glitch in the platform’s auto-translation feature.

Meta is also facing accusations of “shadow banning” pro-Palestinian content, that is, quietly limiting its reach and visibility. A large number of users, including some with millions of followers, complained that their posts in support of Palestine (particularly 24-hour “Stories”) saw a significant drop in views. Comments containing the Palestinian flag emoji are also being pushed to the bottom of the comments section, where they can only be seen by clicking the “more” option. A report published by The Intercept cited Meta’s confirmation that it is removing the Palestinian flag emoji in “offensive” contexts that violate its community guidelines.