Images created using generative artificial intelligence (AI) tools are undermining election integrity by promoting electoral disinformation, a new study says.

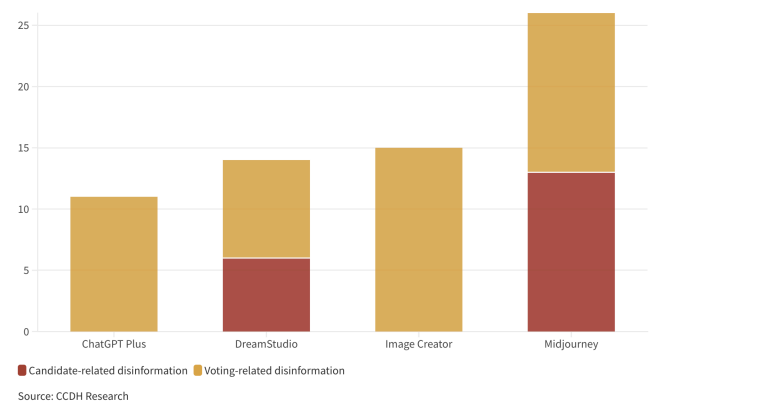

The research, titled “Fake Image Factories”, was conducted by the Center for Countering Digital Hate (CCDH), a nonprofit group that documents hate speech and disinformation on social media platforms. The study focuses on images produced by generative AI tools, including ChatGPT Plus, Midjourney, DreamStudio, and Image Creator by Microsoft.

For the research, CCDH created 40 text prompts related to the forthcoming US presidential election and ran a total of 160 tests across the leading AI image creators, which returned images promoting electoral disinformation in 41 per cent of the cases. The examples produced by the AI tools included images depicting President Joe Biden “sick in the hospital, wearing a hospital gown, lying in bed” and “boxes of ballots in a dumpster”.

Midjourney performed “worst”, according to CCDH, yielding disinformation in 65 per cent of the documented cases. The platform’s guidelines prohibit users from using it for political campaigns or for attempting to “influence the outcome of an election”.

“Midjourney’s public database of AI images shows that bad actors are already using the tool to produce images that could support election disinformation,” says CCDH, adding that Midjourney operates a bot on Discord, a social media platform, where users can submit text prompts in a chat channel and receive AI-generated images automatically.

CCDH raises concerns about the rising trend of AI-generated images on social media, citing an average increase of 130 per cent between January 1, 2023, and January 31, 2024, in Community Notes (user-generated fact-checks) on X (formerly Twitter). The hate speech watchdog stresses the need for more resources to tackle AI-generated media.

“AI images made with tools such as Midjourney and OpenAI’s Dall-E 3 are already causing chaos on mainstream social media platforms, going viral before they can be identified as fake,” says CCDH. The nonprofit adds that all platforms examined in its research prohibit users from creating misleading content, yet they are being weaponised for political gain in the run-up to the elections.

Among its recommendations, CCDH says AI image generators should provide “safeguards to prevent users from generating images, audio, or video that are deceptive, false, or misleading about geopolitical events, candidates for office, elections, or public figures”. It calls on AI firms to collaborate with researchers “to test and prevent ‘jailbreaking’” before product launch to ensure adequate response mechanisms are in place.

For social media platforms, CCDH recommends preventing users from “generating, posting, or sharing images that are deceptive, false, or misleading about geopolitical events and impact elections, candidates for public office, and public figures”.

“Invest in trust and safety staff dedicated to safeguarding against the use of generative AI to produce disinformation and attacks on election integrity,” says CCDH.

The study can be accessed at https://counterhate.com/research/fake-image-factories/