October 24, 2022 – Facebook and TikTok’s failure to tackle misinformation continues ahead of the US midterm elections, a new report has found.

The report, released by human rights watchdog Global Witness in partnership with Cybersecurity for Democracy (C4D) at New York University, highlights the continuing failure of leading social media platforms, particularly Facebook and TikTok, to catch and reject advertisements laced with misinformation.

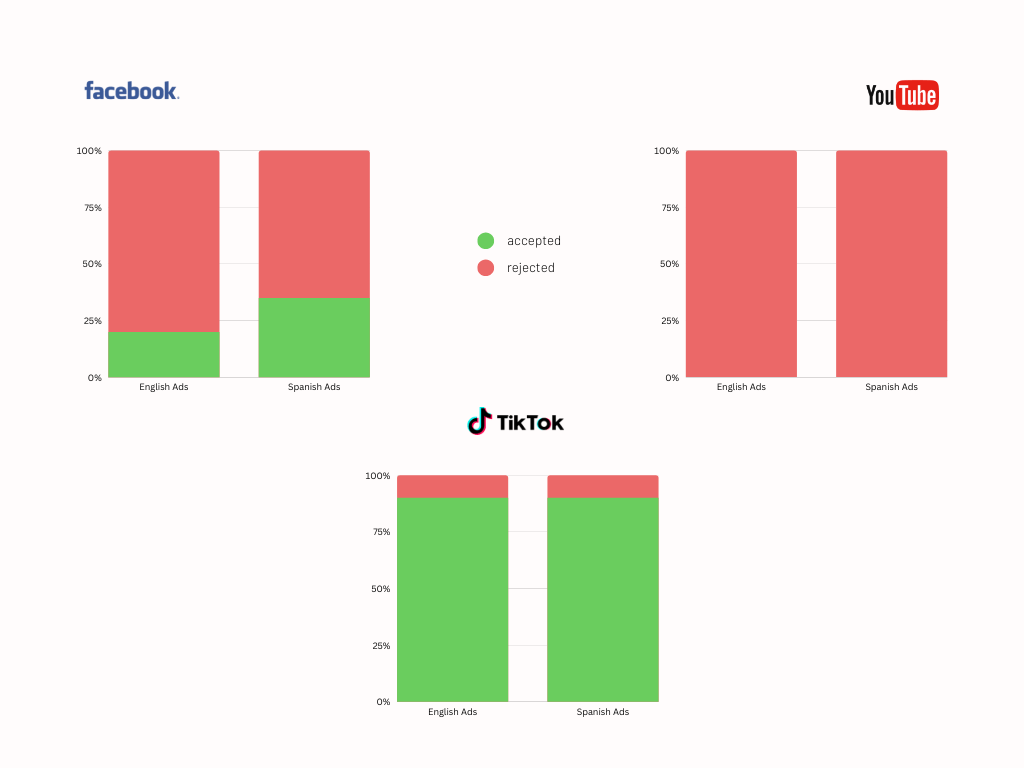

The organisations ran an experiment on Facebook, TikTok and YouTube to assess how effectively the platforms catch misinformation. About 20 ads containing misleading information were submitted to the three platforms through a dummy account. The ads, written in English and Spanish, contained falsehoods about the integrity of elections and incorrect voting dates.

YouTube proved the most effective at detecting misinformation in the submitted material, catching almost every misleading ad and suspending the channel used to submit them. Facebook and TikTok, however, produced alarming results.

The researchers found that TikTok, despite its 2019 ban on political advertising, approved about 90 per cent of the ads containing false or misleading information. Facebook also approved a “significant number” of the test submissions. The researchers deleted the approved ads before they could be published.

“YouTube’s performance in our experiment demonstrates that detecting damaging election disinformation isn’t impossible,” Laura Edelson, co-director of the C4D team, said in a statement. “But all the platforms we studied should have gotten an ‘A’ on this assignment. We call on Facebook and TikTok to do better: stop bad information about elections before it gets to voters.”

A Meta spokesperson responded to the investigation by saying the tests “were based on a very small sample of ads, and are not representative given the number of political ads we review daily across the world”.

They added that Meta’s review process involves “several layers of analysis and detection” at both the submission and publication stages.

The account the researchers used to submit the misinformation remained active on TikTok until the researchers informed the company.

“TikTok is a place for authentic and entertaining content which is why we prohibit and remove election misinformation and paid political advertising from our platform,” TikTok said in response to the experiment. “We value feedback from NGOs, academics, and other experts which helps us continually strengthen our processes and policies.”

In light of the results, the researchers have reiterated the need for stronger content moderation across leading social media platforms.