Social media platforms are now one of the most important ways information reaches a wide audience. In Sri Lanka, the number of people who consume, and participate in, social media continues to rise. Most people with a smartphone also have internet access and are active on some form of social media. For many, these platforms have overtaken print, radio, and television as the primary means of learning about important issues and events.

Sometimes, however, internet-based hate speech inspires rioters to physically harm members of minority groups. Although Sri Lanka's civil war ended in 2009, many of the underlying problems between the Sinhala and Tamil communities have not been addressed. Meanwhile, tensions that could feed Islamophobia have been growing since the Easter Sunday bombings of 2019.

Hate rising

In tandem with the rise in internet and social media use in the country, hate crimes and hate speech incidents have proliferated on online forums. The popularity of social media has skyrocketed over the past several years, allowing millions of people to connect with one another. Thanks to the spread of mobile phones, access to social media has quickly extended across the country, although use remains higher in metropolitan areas. While only about 10 per cent of Sri Lankans had internet access in 2011, by January 2021 that figure had risen to 50.8 per cent. The Covid-19 pandemic drove a further rise in internet use. By the beginning of 2021, Sri Lanka had 10.90 million internet users, a 7.9 per cent increase on 2020 (Data Portal, 2021).

In this context, it has become clear that social media has been a significant driver of the rise in violent crime in recent years. Religion or belief has repeatedly been used as a weapon of prejudice and violence in Sri Lanka, and such abuse has persisted because of a lack of clear action to address its causes.

Concerns have been expressed throughout South Asia regarding the ability and obligation of platforms like Facebook to police their use by a population numbering in the tens of millions and posting in dozens of languages and hundreds of dialects.

Traditional regulations and laws, which are both poorly enforced and inadequate in the face of this new generation of threats, only make the situation worse. Misinformation, hate speech, incitement to violence, racism, extremism, gender-based violence, and sexual exploitation have flourished in the unchecked environment provided by digital platforms like Facebook.

Facebook and riots

The part Facebook played in the anti-Muslim riots in Digana, Sri Lanka, in 2018 is a clear example of how the company failed to enforce its own community standards and to act in time.

After H.G. Kumarasinghe, a 41-year-old father of two, died in March 2018 from head injuries sustained in an attack, racial hostility surfaced in Digana and Teldeniya. Although the attack itself had nothing to do with race, this single incident stoked fears of renewed racist campaigns and violence against minority communities. Many people in Sri Lanka used the increasingly influential Facebook to spread rumours and paint a racially charged picture of the events.

By the end of the evening, fatalities had been reported, dozens of shops had been destroyed by fire, and many people had been injured. All schools in the Kandy District were closed, and the police imposed a curfew on Digana. The government dispatched more than a thousand police officers, 300 members of a special task force, and 300 army troops to Kandy to restore order and avert a major clash between religious groups.

Similarly, a video that went viral on Facebook sparked unrest in Ampara, where people came to believe that a pill causing infertility had been mixed into food served at the New Cassim Hotel, a modest local restaurant. The Government Analyst’s Department later confirmed that the particles found in food sold at the eatery were merely clumps of flour, not sterilizing chemicals as alleged, putting the speculation to rest. But the violence that followed, fueled by entrenched racial bigotry, spread from Ampara to central Kandy, where it claimed two lives, left several people injured, and wreaked havoc on property.

Ahamed Farsith, the New Cassim restaurant’s cashier, still appears shocked and confused. The 28-year-old, who is single, is afraid to venture into the city alone because he does not speak Sinhala, the language of the vast majority of the population. “Everyone told me that I had confessed to putting infertility pills in the food in a video posted on Facebook. Almost immediately, the clip was shared thousands of times across Facebook. I was publicly humiliated and subjected to severe criticism because of something I had nothing to do with,” Farsith says. “I had never heard of ‘wandapethi’ (sterilization pills) until the day the mobs descended on my restaurant.”

“A guest at my hotel who had been eating curry approached me, showing me what he claimed were particles from the curry and asking me to identify them,” Farsith says. “When he asked me again, I told him to ask the hotel worker, since my Sinhala isn’t very good. ‘Damadha, damadha wanda pethi?’ (Did you put in the infertility pill?) he asked me. I seriously feared for my safety. Several men had their phones out and were filming me.” Remembering those moments, Farsith reflects: “I felt like I had no choice but to agree to whatever the men asked of me. After the Facebook Live videos, a swarm of new people surrounded my hotel.” The crowd then began destroying Farsith’s hotel and went on to attack many other hotels, mosques, and houses in Ampara.

Twitter likewise played a crucial role in the 2014 anti-Muslim riots in Aluthgama.

The damaged New Cassim Hotel on D.S. Senanayake Street in Ampara town

Gate of one of the vandalized mosques

Facebook ignored repeated pleas

The Sri Lankan government blamed Facebook for the anti-Muslim riots of March 2018, which left three people dead and led to a state of emergency being declared. During that wave of violence against Muslims, the government temporarily blocked access to Facebook. Although the move looked like a radical crackdown on new technology, the government saw it as a last resort. The deadly riots of 2018 followed years of calls from government and civil society groups for Facebook to regulate ethno-nationalist accounts that spread hate speech and incited violence.

Authorities, academics, and local NGOs say they had been urging Facebook since at least 2013 to do more to prevent its platform from being used to incite violence or to discriminate on the basis of religion or ethnicity. They raised the issue with Facebook executives on multiple occasions, both in one-on-one meetings and in open forums where they shared their extensive research. They say the company did very little in response.

Facebook, Instagram, and WhatsApp were among the services the government blocked, saying it did so to stop the violence from spreading. Authorities said they could not trust Facebook to remove violent content quickly enough. The Centre for Policy Alternatives (CPA) wrote a public letter to Facebook stating that it had documented hate speech in Sri Lanka for the previous four years. Over several years, Senior Researcher Sanjana Hattotuwa presented these reports to multiple Facebook representatives, each time having them translated into Sinhala and Tamil.

These concerns were also raised openly and directly with Facebook representatives at the December 2017 Global Voices Summit, during meetings with Facebook at the Government Information Department, and at the June 2017 Digital Disinformation Forum hosted by the National Democratic Institute for International Affairs and the Center on Democracy, Development, and the Rule of Law at Stanford University. According to CPA, not a single submission received a serious response that actually investigated the issues raised by the evidence and data provided.

Ineffective reporting option

Facebook moderators, based in cities around the world, rely heavily on user reports to identify and remove potentially harmful or offensive content. When a post is reported, a moderator reviews it to determine whether it violates the site’s community guidelines.

Muslim residents of Digana, however, say they frequently report anti-Muslim posts to Facebook, but the company rarely removes them. Some of the Sri Lankans we spoke with also described belonging to large, loosely organized groups whose sole purpose was to report abusive posts to Facebook in the hope that the company would remove them. Asked whether these claims were accurate, Facebook did not give a direct answer.

Screenshots of Facebook posts made in the days before and during the violence show extremists stoking fear of Muslims and calling on fellow Buddhists to target them. The dates on the screenshots indicate that the content remained on the site for days after being reported.

It is unclear whether the social media blackout helped: at the time, Google searches for “VPN” increased in Sri Lanka, and extremists continued to post on Facebook, as did government officials, intellectuals, and the media. Internet freedom advocates argued that the government should not try to solve the problem by shutting down social media platforms, and that Facebook should simply have enforced its own rules on hate speech.

Almost immediately after Facebook, Instagram, and WhatsApp were blocked, the company sent a delegation of policy officials to the country. Officials and civil society groups said it was the highest-level Facebook delegation ever to visit on official business.

However, Facebook did not respond directly when asked why it had not enforced its hate speech policies in Sri Lanka despite repeated requests over a long period of time.

Is the language a real problem, or just an excuse?

Human rights advocates and activists argue that, rather than shutting down access to social media, policymakers should consider laws and regulations that hold internet companies and users accountable for human rights abuses. A further complication is that platforms such as Facebook have admitted they cannot moderate Sinhala content effectively.

Although Sinhala is the language of the majority of Sri Lankans, officials and internet freedom advocates there believe the company applies its hate speech standards more consistently and stringently to content in English. They say that, because it lacks Sinhala-speaking moderators, the company has done too little to deal with hate speech posted in Sinhala.

One user tweeted that he had reported a Sinhala Facebook post that read, “Kill all Muslims, don’t even let an infant of the dogs escape.” He said he received a response six days later stating that the post did not violate any specific Facebook community standard.

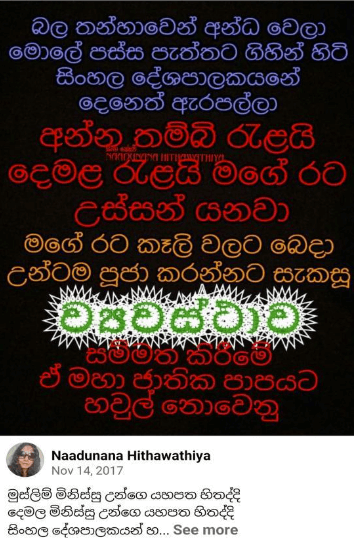

Below is a small selection of the many Sinhala posts published by extremist groups as far back as 2012. They remain accessible on Facebook even though numerous users have reported them for spam or other violations of the platform’s community guidelines.

Post translation: ‘Corrupt greedy politicians, open your eyes! Muslim and Tamil gangs are stealing my country. Don’t support a new constitution that separates my country to pieces’

Post translation: ‘This is not Arab. This is Diyatha Uyana in Battaramulla, Sri Lanka. Share this across for everyone to know what is happening to the country’

Post translation: ‘These are the Halal banks that steal money of Sinhalese and make Muslims rich with that money’

Caption translation: ‘We are already very late. Let’s get together to eradicate extremist Muslim cancer from Sri Lanka’

Post translation: ‘Sri Lanka will soon become a Muslim country? If actions are not taken, this is the end of our race’

Reporting to Sri Lankan authorities

While Sri Lankan law protects everyone’s right to practise their faith without interference, obstacles remain to this freedom being fully realized. Respondents interviewed for this study also said they had complained about Facebook harassment to CERT, the Sri Lankan government’s advisory body on cyber-security, which suggested they contact Facebook directly. As Mohomed Raslan of Digana put it, “you are stuck in a loop and not getting the kind of support you need.”

Minority Rights Group International (MRG) released a report titled ‘Confronting intolerance: continued violations against religious minorities in Sri Lanka’, which details the ongoing persecution of Christians and Muslims in the country. MRG notes that there are still significant shortcomings in the use of the law to punish those responsible for acts of religious bigotry and persecution.

Even though the Sri Lankan constitution guarantees the rights to equality, non-discrimination, and freedom of religion and worship, many of the reported cases show police or government officials either participating in violations of religious freedom or failing to protect victims. S.Y. Muhammad Saleem, a member of the administration committee of the Jummaha mosque in Dambulla, said anti-Muslim screeds posted on Facebook were responsible for multiple attacks on the mosque. “Two petrol bombs were thrown into the mosque, making this the most recent attack on the building. Buddhist extremist groups campaign every day saying Islamic extremists must be exterminated and, if not, worse conflict could occur in the future,” he said.

Saleem says he and other Muslims have received death threats via Facebook, which prompted him to report them to the authorities. When Muslims “respond on Facebook against their narrow views,” he says, “we see 40-50 well-organized reaction comments, slinging mud at us from extremist Buddhists.”

What to do now

Facebook later acknowledged that it had made mistakes in not removing posts spreading racial hatred in Sri Lanka, which may have stoked tensions and contributed to the violence in Digana in March 2018. In a statement to Bloomberg, the company said it “deplores” the misuse of its platform and regrets the “very real human rights impacts that resulted.” But by then the widespread damage had already been done, and strained relations between communities and rising Islamophobia have since fueled tensions further.

Facebook representatives visited Sri Lanka in July 2019 and said the company had invested heavily in Sinhala and Tamil language experts. Facebook has not, however, said whether it is establishing any formal mechanism with Sri Lankan authorities. There was no immediate comment from the company, and former president Maithripala Sirisena did not specify what, if any, concrete commitments Facebook had made.

According to a report by Groundviews editor Sanjana Hattotuwa, a study of 465 Facebook accounts found content portraying Sinhalese Buddhists as “under threat” from Islam and Muslims and “consequently in need of urgent and if necessary violent pushback.”

The 2018 riots in Sri Lanka illustrate the dangers that inadequately policed social media platforms pose to the most vulnerable members of society. A prominent Sri Lankan sociologist says Facebook and other social media networks must accept some accountability for the content they publish. Platforms need to adopt systems that can verify sources, photos, and information, he says, because “the mishandling of misinformation and fake news can result in disastrous consequences.” They must invest in moderating posts that could have life-threatening consequences if they reach the wrong audience, and must remove all hate speech immediately.

This report is part of DRM’s exclusive journalism series exploring Big Tech’s failure to contain hate speech and lack of corporate accountability across Asia.